The Core Problem: Why Your AI Chatbot Is Lying to You

In the executive suite, everyone is talking about generative AI. ChatGPT and Gemini are game-changers, but they come with a critical flaw: hallucination, the tendency to state false information with complete confidence. Your AI might tell a customer their order shipped last Tuesday when, in reality, it hasn't left the warehouse. Or it might cite a policy that was retired last year.

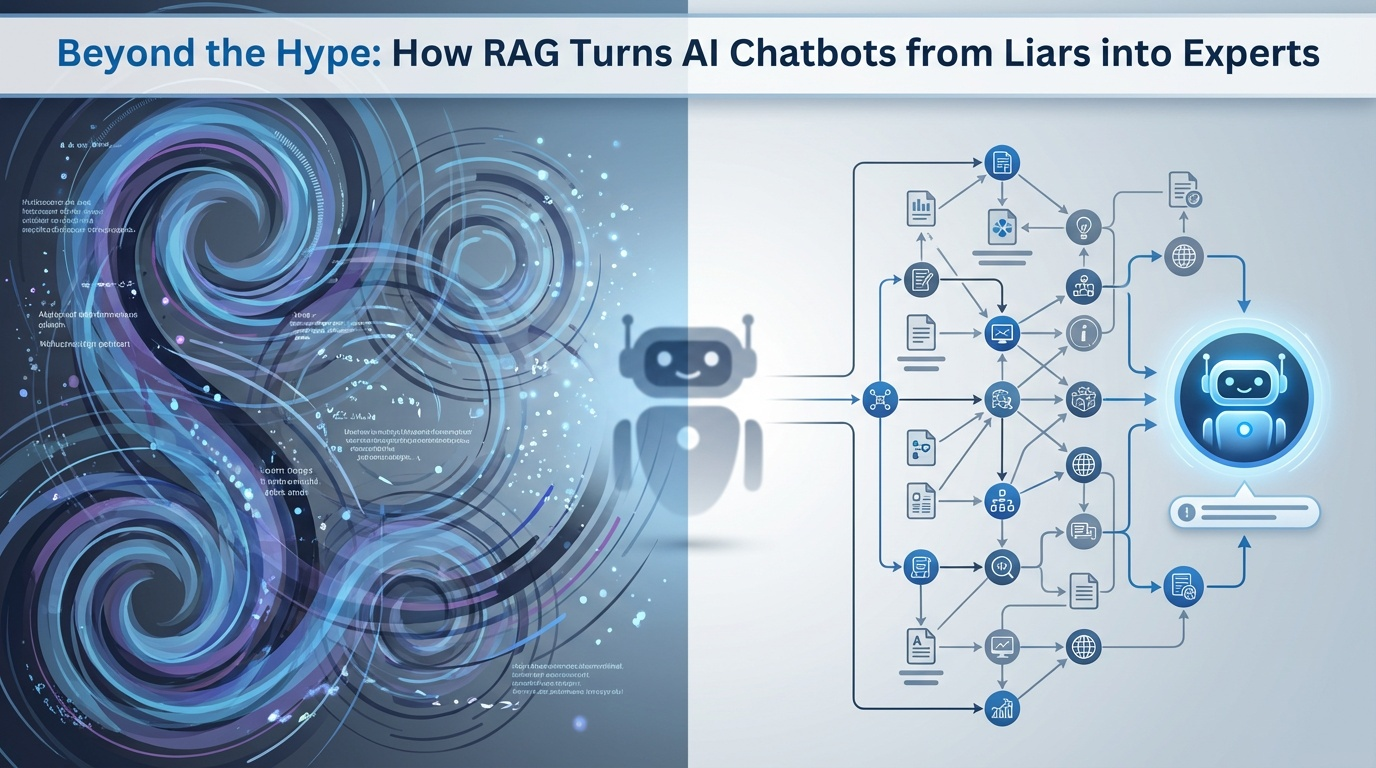

This failure isn’t malicious; it’s structural. Large Language Models (LLMs), the brains behind these chatbots, are trained on vast, static datasets. They are magnificent predictors of the next word, but they are not search engines. On their own, they rely solely on what they learned during training, which can be months or even years out of date.

For a Chief Product Officer or a CXO focused on accuracy and brand trust, this data decay and fabrication is unacceptable. The solution isn't a smarter LLM; it's a completely different architecture. The solution is Retrieval-Augmented Generation (RAG).

What is RAG? A Simple Explanation

Think of the standard LLM chatbot as a brilliant, well-read student who took their finals two years ago and has been locked in a room ever since.

RAG, or Retrieval-Augmented Generation, gives that brilliant student a real-time, personalized library and the instructions to use it before answering a question.

RAG is a two-step process that inserts proprietary, up-to-the-minute data into the AI's workflow:

- Retrieval (The Librarian): When a user asks a question, the RAG system first searches your company's own secure database, documentation, CRM records, or live API endpoints. It retrieves the most relevant, context-specific information, the ground truth.

- Augmentation and Generation (The Smart Student): The system then takes that retrieved, factual data and hands it to the LLM along with the user's question. It essentially tells the LLM: "Here is the exact information. Use only this context to formulate your polite, helpful, and accurate answer."

The LLM is no longer guessing based on stale public data; it is generating based on authenticated, proprietary facts.
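For readers who want to see the two steps concretely, here is a minimal Python sketch. It is an illustration under stated assumptions, not a production design: the `DOCUMENTS` store, the `retrieve` function, and the `build_prompt` helper are hypothetical names, the sample policies are made up, and simple keyword overlap stands in for the vector search that most real RAG systems use. The final call to an LLM is left out.

```python
import re

# Hypothetical in-memory "knowledge base" with made-up policies. A real
# system would query a database, document store, CRM, or live API instead.
DOCUMENTS = {
    "returns-policy": "Customers may return items within 30 days of delivery.",
    "shipping-policy": "Standard orders ship within 2 business days of payment.",
    "warranty-policy": "Hardware is covered by a 12 month limited warranty.",
}


def tokenize(text):
    """Lowercase the text and split it into a set of words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question, documents, top_k=1):
    """Step 1 (retrieval): rank documents by word overlap with the question.

    Keyword overlap is an assumption made for brevity; it is a stand-in
    for the semantic search a real retriever would perform.
    """
    question_words = tokenize(question)
    scored = [
        (len(question_words & tokenize(text)), doc_id)
        for doc_id, text in documents.items()
    ]
    scored.sort(reverse=True)
    # Drop documents that share no words with the question.
    return [doc_id for score, doc_id in scored[:top_k] if score > 0]


def build_prompt(question, doc_ids, documents):
    """Step 2 (augmentation): place the retrieved text in front of the question."""
    context = "\n".join(f"[{doc_id}] {documents[doc_id]}" for doc_id in doc_ids)
    return (
        "Answer using only the context below. "
        "If the context does not contain the answer, say so.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}"
    )


question = "When do orders ship?"
doc_ids = retrieve(question, DOCUMENTS)
prompt = build_prompt(question, doc_ids, DOCUMENTS)
print(prompt)  # this prompt would then be sent to the LLM for generation
```

The point of the sketch is the order of operations: the system looks up the facts first, then instructs the model to answer from those facts rather than from memory.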

The Business Value of RAG: Beyond Just "Fewer Mistakes"

For decision-makers, RAG is not a technical feature; it is a business enabler that directly impacts your bottom line and strategic goals.

| Business Benefit | The RAG Difference | Impact for CXOs |
|---|---|---|
| Reduce Hallucination | The AI generates answers from verified, specific documents, not probabilistic general training data. | Reduces legal risk and customer service escalations due to false information. |
| Real-Time Knowledge | RAG connects to live APIs and operational databases, enabling instant updates to policy, inventory, or pricing. | Supports accuracy in high-stakes transactions (e-commerce, financial services). |
| Proprietary Insight | Your company's unique institutional knowledge (patents, internal reports, client histories) becomes instantly accessible. | Drives efficiency for internal teams and provides a competitive, defensible advantage. |
| Traceability | RAG systems can display the specific document or source used to generate the answer. | Builds confidence and audit trails, critical for regulated industries. |
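To make the traceability row concrete, the sketch below shows one way an answer could carry its sources with it. Everything here is an assumption for illustration: the `Source` and `SourcedAnswer` types, the `render` function, and the sample document title and date are hypothetical, and a real system would populate them from whatever the retrieval step returned.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Source:
    doc_id: str        # hypothetical identifier of the retrieved document
    title: str
    last_updated: str  # ISO date string, made up for this example


@dataclass(frozen=True)
class SourcedAnswer:
    text: str
    sources: tuple     # the Source records the answer was generated from


def render(answer):
    """Append a numbered source list so a reader or auditor can check the claim."""
    lines = [answer.text, "", "Sources:"]
    for number, source in enumerate(answer.sources, start=1):
        lines.append(
            f"  [{number}] {source.title} "
            f"({source.doc_id}, updated {source.last_updated})"
        )
    return "\n".join(lines)


answer = SourcedAnswer(
    text="Standard orders ship within 2 business days of payment [1].",
    sources=(Source("shipping-policy", "Shipping Policy", "2024-01-15"),),
)
print(render(answer))
```

Keeping the sources attached to the answer, rather than logging them separately, is one possible design choice; it means the customer-facing reply and the audit record come from the same object.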

Why This Matters Now: The Shift from General AI to Contextual AI

Top-performing LLMs are converging in capability. The real differentiator in the market is no longer raw model size; it is contextual relevance.

Your competitors are launching generic, publicly trained chatbots that offer little more than basic assistance. By implementing RAG, your product, support, or knowledge base chatbot transforms into a proprietary, highly accurate expert.

The Key Message: RAG is the architecture that allows you to deploy AI at scale while safeguarding your brand integrity. It’s the difference between an assistant that thinks it knows your business and one that actually knows your business.